I studied the correlation between rankings and content scores from four popular content optimization tools: Clearscope, Surfer, MarketMuse, and Frase. The result? Weak correlations all around.

Correlation doesn’t necessarily imply causation, but this suggests that obsessing over your content score is unlikely to lead to significantly higher Google rankings.

Does that mean content optimization scores are pointless?

No. You just need to know how best to use them and understand their flaws.

Most tools’ content scores are based on keywords. If top-ranking pages mention keywords your page doesn’t, your score will be low. If your page mentions them, your score will be high.

While this has its obvious flaws (having more keyword mentions doesn’t always mean better topic coverage), content scores can at least give some indication of how comprehensively you’re covering the topic. This is something Google is looking for.
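The keyword-based scoring described above can be sketched in a few lines of Python. This is a toy illustration, not how any of the four tools actually computes its score: the rule (percentage of recommended keywords that appear at least once in the draft), the function name `keyword_coverage_score`, and the sample keywords are all hypothetical. Real tools use their own formulas, which may also weight how often each term appears.

```python
import re


def keyword_coverage_score(draft: str, recommended: list[str]) -> float:
    """Hypothetical toy score: % of recommended keywords found in the draft.

    Real tools use their own, undisclosed formulas; this only illustrates
    the keyword-matching idea, not any specific product.
    """
    if not recommended:
        raise ValueError("need at least one recommended keyword")
    text = draft.lower()
    hits = 0
    for kw in recommended:
        # Whole-phrase, case-insensitive match (word boundaries on both ends).
        pattern = r"\b" + re.escape(kw.lower()) + r"\b"
        if re.search(pattern, text):
            hits += 1
    return 100 * hits / len(recommended)


# Hypothetical keyword list and draft, for illustration only.
recommended = ["keyword research", "on-page SEO", "link building", "technical SEO"]
draft = "Start with keyword research, then fix technical SEO issues."

print(keyword_coverage_score(draft, recommended))                  # 50.0
print(keyword_coverage_score(" ".join(recommended), recommended))  # 100.0
```

The draft covers two of the four keywords and scores 50. Note that simply pasting the keyword list scores a perfect 100, even though it says nothing about the topic.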

If your page’s score is significantly lower than the scores of competing pages, you’re probably missing important subtopics that searchers care about. Filling these “content gaps” might help improve your rankings.

However, there’s nuance to this. If competing pages score in the 80-85 range while your page scores 79, it likely isn’t worth worrying about. But if it’s 95 vs. 20, then yeah, you should probably try to cover the topic better.

Key takeaway

Don’t obsess over content scores. Use them as a barometer for topic coverage. If your score is significantly lower than your competitors’ scores, you’re probably missing important subtopics and might rank higher by filling those “content gaps.”

There are at least two downsides you should be aware of when it comes to content scores.

They’re easy to cheat

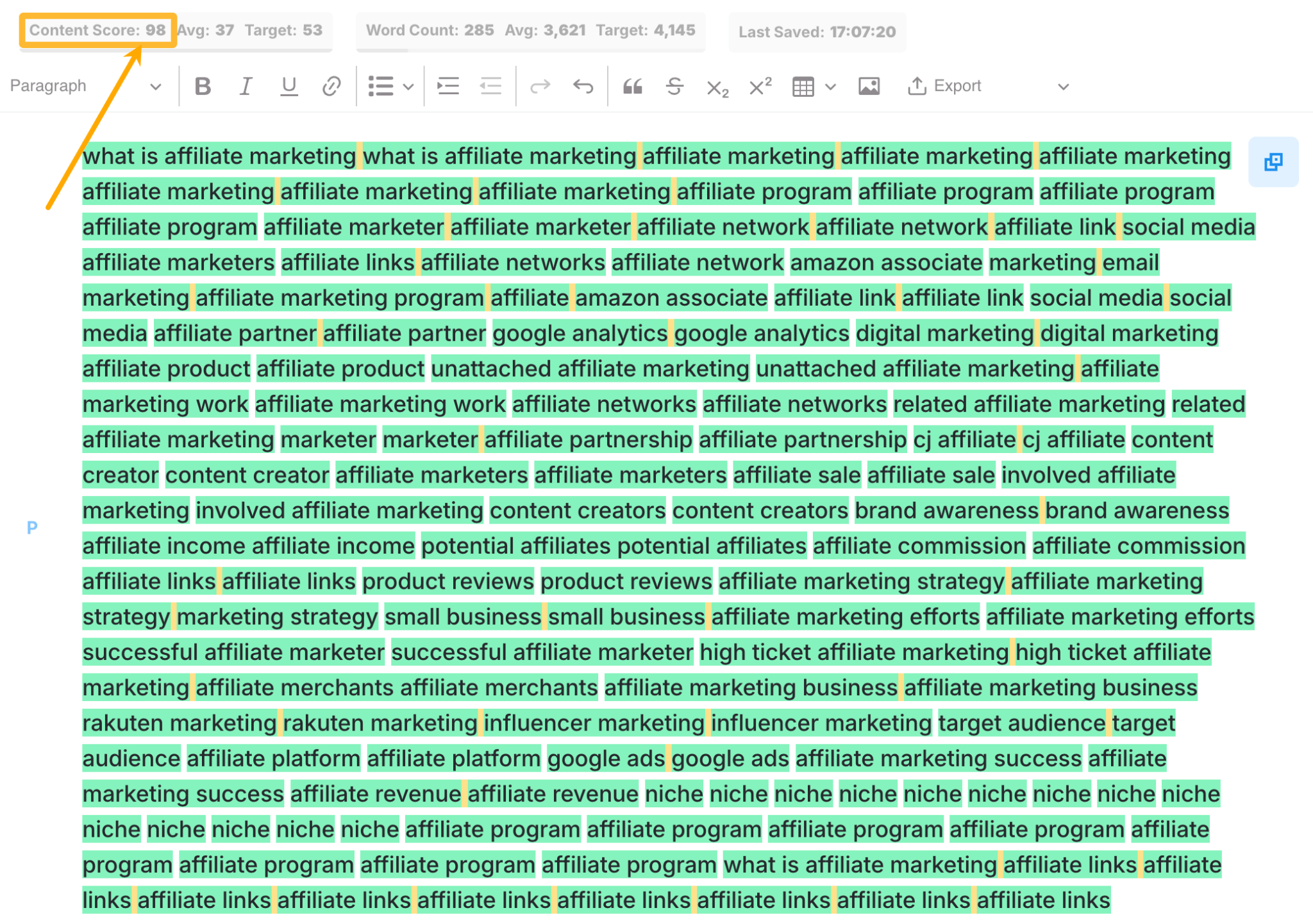

Content scores tend to be largely based on how many times you use the recommended set of keywords. In some tools, you can literally copy-paste the entire list, draft nothing else, and get an almost perfect score.

This is something we aim to solve with our upcoming content optimization tool: Content Master.

I can’t reveal too much about this yet, but it has a big USP compared to most existing content optimization tools: its content score is based on topic coverage—not just keywords.

For example, it tells us that our SEO strategy template should better cover subtopics like keyword research, on-page SEO, and measuring and tracking SEO success.

But unlike with other content optimization tools, lazily copying and pasting related keywords into the document won’t necessarily increase our content score. It’s smart enough to understand that keyword coverage and topic coverage are different things.

Sidenote.

This tool is still in development, so the final release may look a little different.

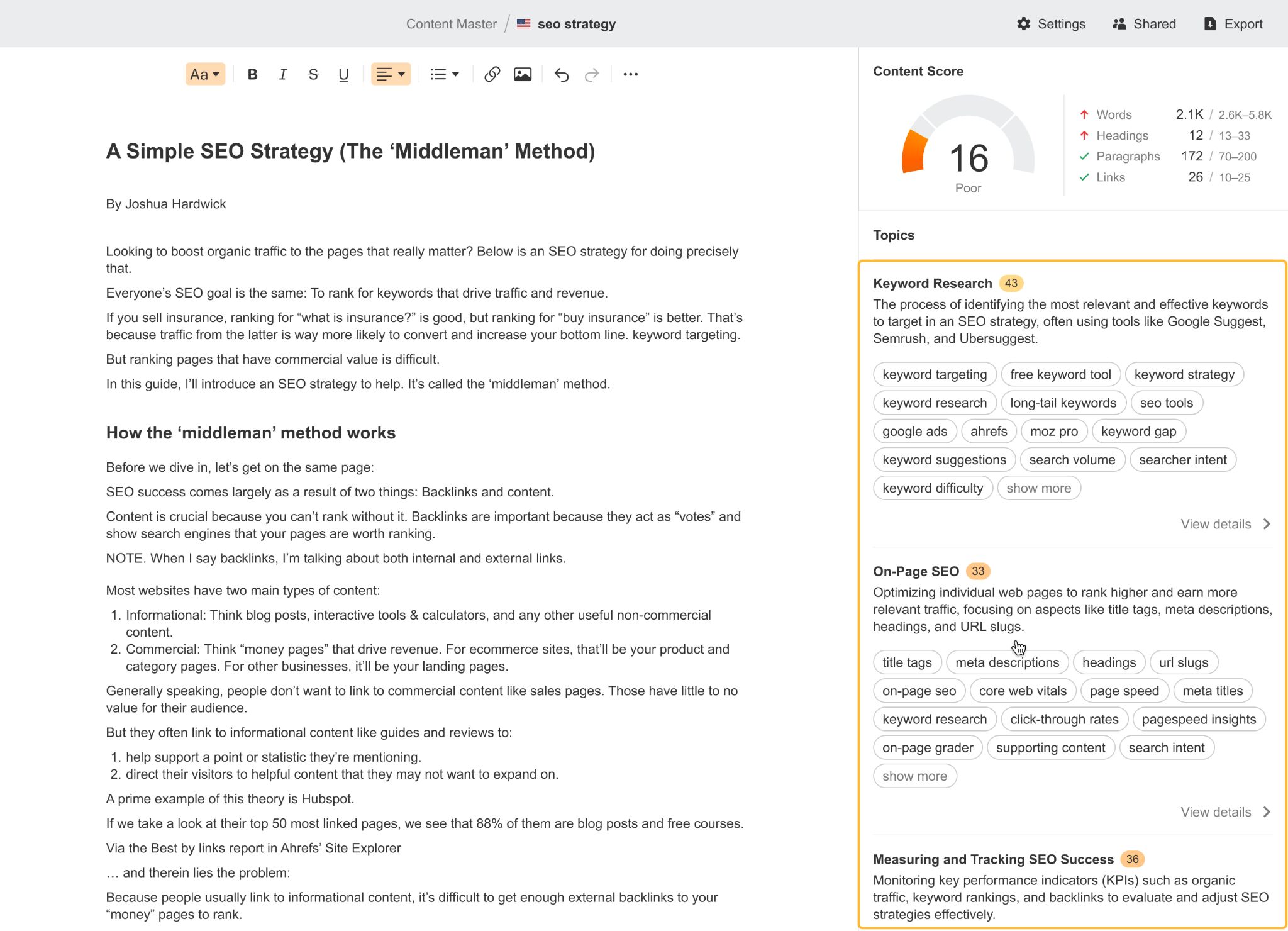

They encourage copycat content

Content scores tell you how well you’re covering the topic based on what’s already out there. If you cover all important keywords and subtopics from the top-ranking pages and create the ultimate copycat content, you’ll score full marks.

This is a problem because quality content should bring something new to the table, not just rehash existing information. Google literally says this in their helpful content guidelines.

In fact, Google even filed a patent some years back to identify ‘information gain’: a measurement of the new information provided by a given article, over and above the information present in other articles on the same topic.

You can’t rely on content optimization tools or scores to create something unique. Making something that stands out from the rest of the search results requires experience, experimentation, or effort, which only humans can bring.

Big thanks to my colleagues Si Quan and Calvinn who did the heavy lifting for this study. Nerd notes below. 😉

- For the study, we selected 20 random keywords and pulled the top 20 ranking pages for each.

- We pulled the SERPs before the March 2024 update was rolled out.

- Some of the tools had issues pulling the top 20 pages, which we suspect was due to SERP features.

- Clearscope didn’t give numerical scores; they opted for grades. We used ChatGPT to convert those grades into numbers.

- Despite their increasing prominence in the SERPs, most of the tools had trouble analyzing Reddit, Quora, and YouTube. They typically gave these results a zero or no score at all. Results with no score were excluded from the analysis.

- We calculated both Spearman and Kendall correlations (and took the average) because, according to Calvinn (our Data Scientist), Spearman correlations are more sensitive and therefore more easily swayed by small sample sizes and outliers. The Kendall rank correlation coefficient, on the other hand, only takes order into account, so it is more robust for small samples and less sensitive to outliers.
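For readers who want to replicate the approach, here is a minimal pure-Python sketch that computes both coefficients for a single SERP and averages them. It is an illustration, not the study’s actual code: the function names, the choice of Kendall’s tau-b (the variant that adjusts for tied scores), and the sign convention (negating positions so a positive value means higher scores go with better rankings) are assumptions. Unscored pages (`None`) are dropped, mirroring the exclusion described above, while zero scores are kept.

```python
import math
from statistics import mean
from typing import Optional, Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values 1..n (ascending); tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # sorted slots i..j hold ranks i+1..j+1
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the (average) ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        return math.nan  # undefined when every value is tied
    return cov / math.sqrt(sxx * syy)


def kendall_tau_b(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall's tau-b: agreeing minus disagreeing pairs, adjusted for ties."""
    concordant = discordant = tied_x_only = tied_y_only = 0
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied on both: counts toward neither side
            if dx == 0:
                tied_x_only += 1
            elif dy == 0:
                tied_y_only += 1
            elif (dx > 0) == (dy > 0):
                concordant += 1
            else:
                discordant += 1
    pairs = concordant + discordant
    denom = math.sqrt((pairs + tied_x_only) * (pairs + tied_y_only))
    if denom == 0:
        return math.nan
    return (concordant - discordant) / denom


def score_rank_correlation(positions: Sequence[int], scores: Sequence[Optional[float]]) -> float:
    """Average of Spearman and Kendall for one SERP (hypothetical helper).

    Positions are negated so a positive result means higher scores go with
    better (numerically lower) positions. Unscored pages (None) are dropped;
    zero scores are kept.
    """
    kept = [(-p, s) for p, s in zip(positions, scores) if s is not None]
    rank_axis = [p for p, _ in kept]
    score_axis = [s for _, s in kept]
    return (spearman(rank_axis, score_axis) + kendall_tau_b(rank_axis, score_axis)) / 2


# Illustrative numbers only, not data from the study.
positions = [1, 2, 3, 4, 5, 6]
scores = [78, 82, 65, None, 70, 60]
print(round(score_rank_correlation(positions, scores), 3))  # 0.7
```

In this made-up example, Spearman comes out at 0.8 and Kendall at 0.6: Kendall simply counts how many page pairs are in the “right” order, while Spearman also weighs how far apart the ranks are. How the per-keyword values were aggregated across all 20 keywords isn’t specified here, so the sketch stops at a single SERP.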

Final thoughts

Improving your content score is unlikely to hurt Google rankings. After all, although the correlation between scores and rankings is weak, it’s still positive. Just don’t obsess and spend hours trying to get a perfect score; scoring in the same ballpark as top-ranking pages is enough.

You also need to be aware of content scores’ downsides, most notably that they can’t help you craft unique content. That requires human creativity and effort.
